“Conversation Overflow” attacks are the latest attempt to get credential-harvesting phishing emails into your inbox

SlashNext threat researchers have uncovered a dangerous new type of cyberattack in the wild that uses cloaked emails to trick machine learning (ML) tools into accepting a malicious payload. That payload then penetrates enterprise networks to carry out credential theft and other harmful forms of data harvesting.

Our team has termed this new method of bypassing advanced security controls to get phishing messages into targets’ inboxes “Conversation Overflow” attacks. The malicious messages contain two parts. The first is designed for the intended victim to see and interpret as a call to action, whether that is to enter credentials, click a link, or something similar. Below this portion of the message, the threat actor hits “return” numerous times, leaving a large blank space that separates the visible top part of the message from a second, hidden part.

This second part contains hidden text written to read like a legitimate, benign message that could plausibly belong to an ordinary email exchange. It is not intended for the email recipient but for the machine learning security controls in place at many organizations today. By including this benign hidden text, threat actors trick the ML models into classifying the email as “good” and allowing it into the inbox.
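To make the two-part structure concrete, here is a minimal Python sketch, assuming a plain-text email body, that splits a message at a long run of blank lines into its visible and hidden parts. The function name `split_on_overflow_gap` and the `MIN_BLANK_RUN` threshold of 10 lines are hypothetical choices for illustration, not values from SlashNext's research, and HTML emails that conceal text through styling would need separate handling.

```python
# Hypothetical threshold for "significant blank space"; not a value
# reported by SlashNext. Tune it against your own mail corpus.
MIN_BLANK_RUN = 10


def split_on_overflow_gap(body: str, min_blank_run: int = MIN_BLANK_RUN):
    """Split a plain-text email body at the first run of at least
    `min_blank_run` blank (or whitespace-only) lines that has text on
    both sides.

    Returns (visible_part, hidden_part), or None if no such gap exists.
    """
    lines = body.splitlines()
    i = 0
    while i < len(lines):
        if lines[i].strip():
            i += 1  # non-blank line: keep scanning
            continue
        # Start of a blank run: advance j past every blank line in it.
        j = i
        while j < len(lines) and not lines[j].strip():
            j += 1
        if j - i >= min_blank_run:
            visible = "\n".join(lines[:i]).strip()
            hidden = "\n".join(lines[j:]).strip()
            if visible and hidden:
                return visible, hidden
        i = j  # skip the whole blank run
    return None


# Hypothetical example message: a lure, a long blank gap, then benign text.
email = (
    "Please reauthenticate your account:\n"
    "https://example.com/login"
    + "\n" * 40
    + "Thanks for the update on the quarterly report."
)

result = split_on_overflow_gap(email)
if result is not None:
    visible, hidden = result
    print("Visible part:", visible)
    print("Hidden part:", hidden)
```

Separating the message this way could let each part be evaluated on its own, rather than letting the benign tail shape the verdict on the whole email.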

SlashNext threat researchers have recently observed this new technique multiple times, and we believe we are witnessing bad actors beta testing ways to bypass AI and ML security platforms. Traditional security controls rely on “known bad” signatures stored in a database that is continuously updated with new ones. Machine learning is different: most solutions instead look for deviations from “known good” communications and user behavior. In this case, the threat actors insert text that mimics “known good” communication so that the ML model picks up on that text rather than on the malicious part of the message.
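The dilution effect described above can be illustrated with a deliberately simplified Python sketch. It assumes a hypothetical detector that scores a message by the fraction of its words found in a small vocabulary of normal business email and flags anything below a hypothetical 0.5 threshold. `KNOWN_GOOD_VOCAB`, `familiarity`, and `is_flagged` are invented for this example and do not represent how any real ML security product works.

```python
import re

# Hypothetical stand-in for a model of "known good" traffic: a tiny
# vocabulary of words seen in ordinary business email. Real ML systems
# learn far richer features; this is only a toy.
KNOWN_GOOD_VOCAB = {
    "thanks", "for", "the", "update", "on", "quarterly", "report",
    "please", "let", "me", "know", "if", "you", "have", "any",
    "questions", "see", "at", "meeting", "tomorrow",
}

# Hypothetical cutoff: messages less "familiar" than this are flagged.
THRESHOLD = 0.5


def tokenize(text: str) -> list[str]:
    """Lowercase the text and extract alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def familiarity(text: str) -> float:
    """Fraction of tokens that appear in the known-good vocabulary."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    return sum(token in KNOWN_GOOD_VOCAB for token in tokens) / len(tokens)


def is_flagged(text: str) -> bool:
    """Flag messages that deviate too far from known-good wording."""
    return familiarity(text) < THRESHOLD


lure = "Reauthenticate your Microsoft credentials now via this link."
padding = (
    "Thanks for the update on the quarterly report. "
    "Please let me know if you have any questions. "
    "See you at the meeting tomorrow."
)

print(is_flagged(lure))                        # True
print(is_flagged(lure + "\n" * 30 + padding))  # False
```

In this toy, the lure alone scores 0.0 and is flagged, while the same lure followed by blank lines and benign padding scores about 0.74 and passes. The malicious words are unchanged; they are simply outweighed by familiar ones, which is the effect the hidden text is designed to achieve.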

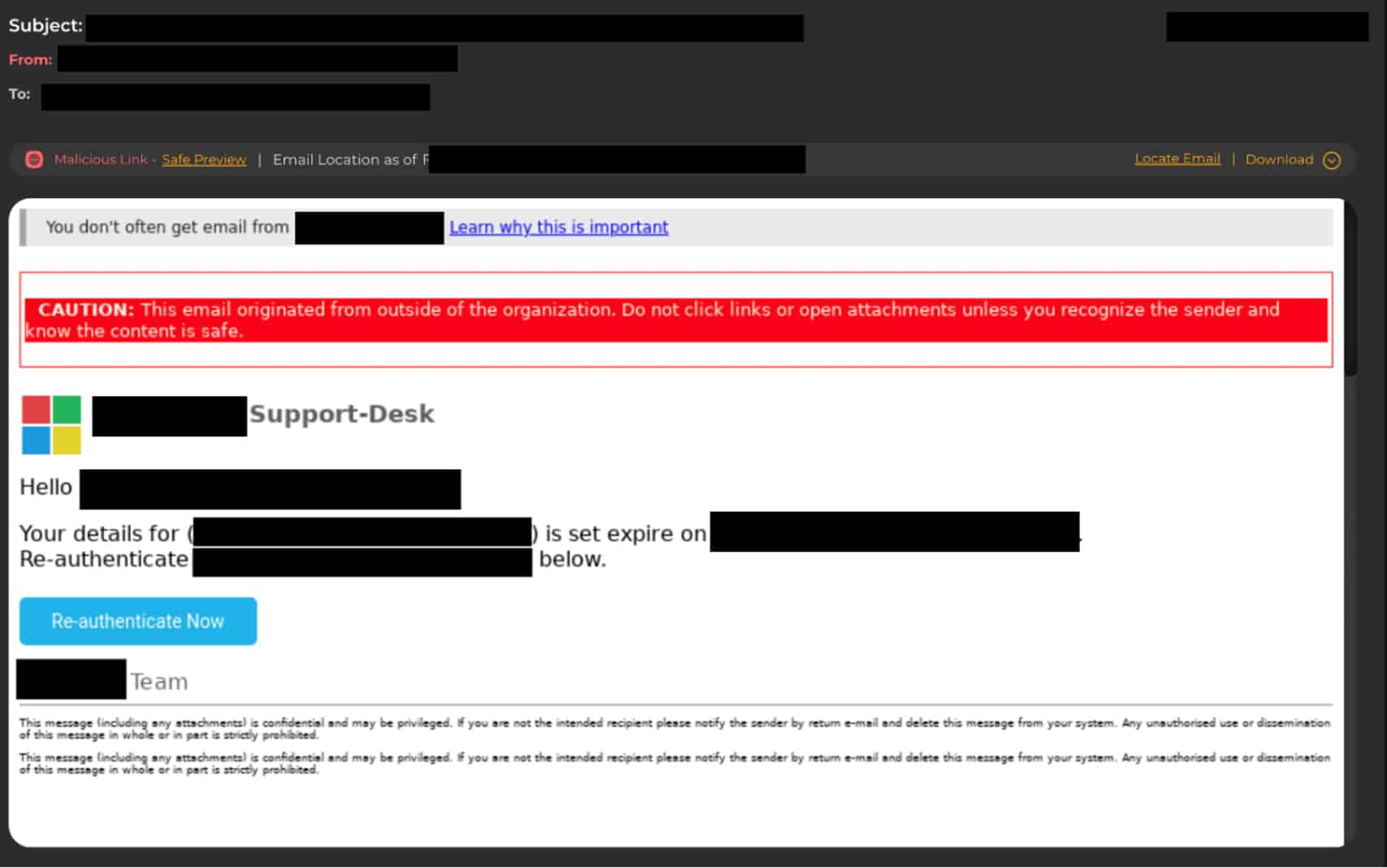

Once a conversation overflow attack successfully bypasses security protections, the attackers can move on to deliver legitimate-looking credential theft messages that ask top executives to reauthenticate certain passwords and logins. That kind of private data is extremely lucrative for sale on dark web forums.

Figure 1: A standard redacted email heading that appears to come from a Microsoft support desk, requesting the reauthentication of a user’s credentials.

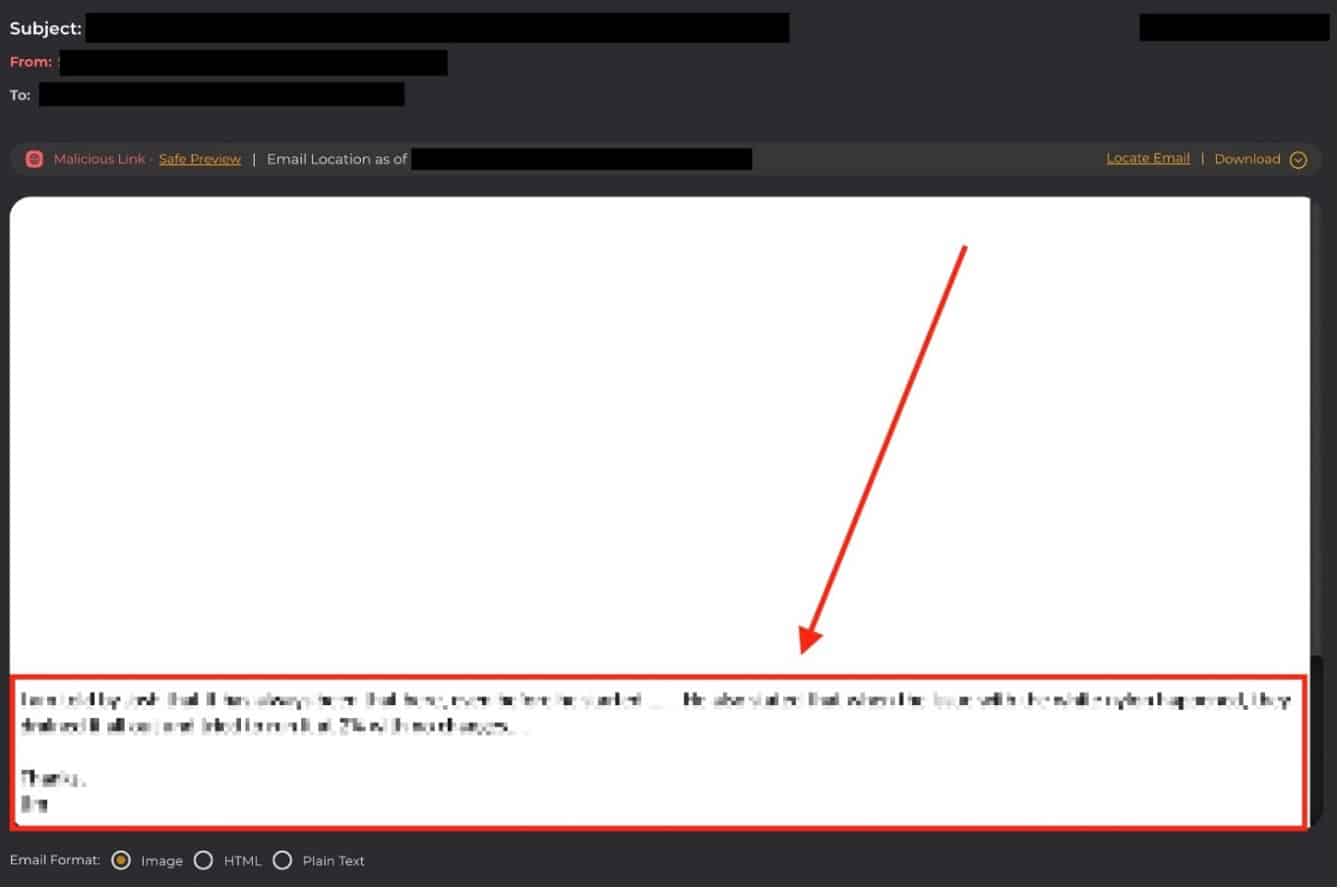

Figure 2: An arrow points to the location of the embedded text at the bottom of the same email message. To the recipient this hidden text looks like blank space, but machine learning algorithms read it as a benign message, so it serves as the delivery mechanism for the credential theft payload.

This is not your typical credential harvesting attack: it is smart enough to confuse some sophisticated AI and ML engines. These findings suggest that cybercriminals are adapting their attack techniques to the dawning age of AI security, and we are concerned that this development points to an entirely new toolkit that criminal hacker groups are refining in real time. The SlashNext research team will continue to monitor not only for Conversation Overflow attacks but also for evidence of new toolkits leveraging this technique being spread on the dark web.