Deepfake social engineering scams have become an increasingly scary trend among cybercriminals. Threat actors are using Artificial Intelligence (AI) and Machine Learning (ML) voice cloning tools to spread misinformation and run scams. It doesn’t take much audio – only about 10 to 20 seconds of a recorded voice – to make a decent reproduction, because the cloning tool extracts the unique details of the victim’s voice from the clip. A threat actor can simply call a victim and pretend to be a salesperson, for example, to capture enough audio to make it work.

Deepfakes can be used for harmless or creative purposes, such as generating realistic CGI characters in movies and video games, or for malicious purposes, such as spreading misinformation, propaganda, or defamatory content.

Deepfakes have become a growing concern in recent years as the technology has become more accessible and easier to use, and as the potential for abuse has become clearer. Some governments and technology companies have taken steps to develop tools and policies to detect and combat deepfakes, but the issue is still complex and evolving.

Here are some real deepfake examples – some humorous, some cool, but all of them show techniques that can be used maliciously:

A new Drake x The Weeknd track just blew up – but it’s an AI fake. (TechCrunch)

CNN reporter calls his parents using a deepfake voice. (CNN)

No, Tom Cruise isn’t on TikTok. It’s a deepfake. (CNN)

Twenty of the best deepfake examples that terrified and amused the internet. (Creative Bloq)

Cybercriminals use deepfakes for fraud, extortion, defamation, election interference, cyberbullying and more. Here are a few recent examples.

A deepfake audio clip of a CEO recently tricked an energy firm based in the UK into transferring $243,000 to a fraudulent account. The AI-generated clip socially engineered the company’s accountant into thinking the CEO had authorized the transfer.

In Dark Reading, Contributing Writer Ericka Chickowski outlined how criminals use deepfake videos to interview for remote work. She noted that the positions applied for by the threat actors were positions with some level of corporate access to sensitive data or systems. As for motives, she mentioned that “security experts believe one of the most obvious goals in deepfaking one’s way through a remote interview is to get a criminal into a position to infiltrate an organization for anything from corporate espionage to common theft.”

Last year, we saw attacks on Twitter and Discord users in a clever twist on the traditional social engineering scheme to steal credentials. The scams used fear and outrage to push victims to act quickly, without taking time to consider whether they were being phished. The users of Twitter and Discord were motivated to resolve an issue that could have had an impact on their status, business, or entertainment, which is why this type of social engineering phishing was so effective.

A few of the most common uses of deepfakes include:

- CEO Fraud: An attacker creates a deepfake video or audio recording of a company CEO or other high-level executive, and then uses this fake content to instruct employees to transfer money to a fraudulent account or disclose sensitive information.

- Customer Service Scams: An attacker creates a deepfake of a customer service representative from a trusted company, and then uses this fake content to trick customers into providing their login credentials or other personal information.

- Business Compromise: An attacker creates a deepfake of a vendor or partner company representative, and then uses this fake content to request changes to payment details or other sensitive information, often with follow-up Business Email Compromise (BEC) emails or Business Text Compromise (BTC) smishing attacks.

Other malicious uses of deepfakes include:

- Spreading Misinformation: Deepfakes can be used to create fake news stories or manipulate real news footage, leading to confusion, distrust, and potentially dangerous situations.

- Discrediting People: Deepfake videos or images can damage reputation and credibility by showing individuals engaging in what looks like inappropriate or illegal activities.

- Blackmail: Deepfakes can be used to ostensibly show people engaging in illegal or embarrassing activities, which can then be used to blackmail or extort them.

- Political Manipulation: Fake videos or images of candidates or supporters can be used to manipulate political campaigns or elections.

Deepfakes and Social Media

Social media platforms are common targets of phishing campaigns and deepfakes, which use psychological manipulation (social engineering) to encourage victims to disclose confidential login credentials. Malicious actors then use the pilfered information to hijack the user’s social media accounts, or even gain access to their bank accounts. But more importantly for enterprises, successful social media attacks on their employees can open the door to infiltration of the company network via the user’s infected device or abused credentials. This means companies need a BYOD (Bring Your Own Device) strategy that includes multichannel phishing and malware protection covering social, gaming, and all messaging apps.

Preventing Scams and Using Generative AI to Battle Deepfakes

There are a few obvious steps you can take to avoid deepfake scams. Verify the information by calling a known and legitimate number. A cybercriminal on the spoofed number won’t receive the call back. Think before reacting to the “social engineering” caller. Does it really make sense that your boss is asking you to put thousands of dollars into a financial account?

In addition, it’s important to implement training around deepfakes, but it’s also critical to know that training isn’t foolproof. Training should include social engineering scams to demonstrate how personal interactions, such as social media interactions, can impact work life.

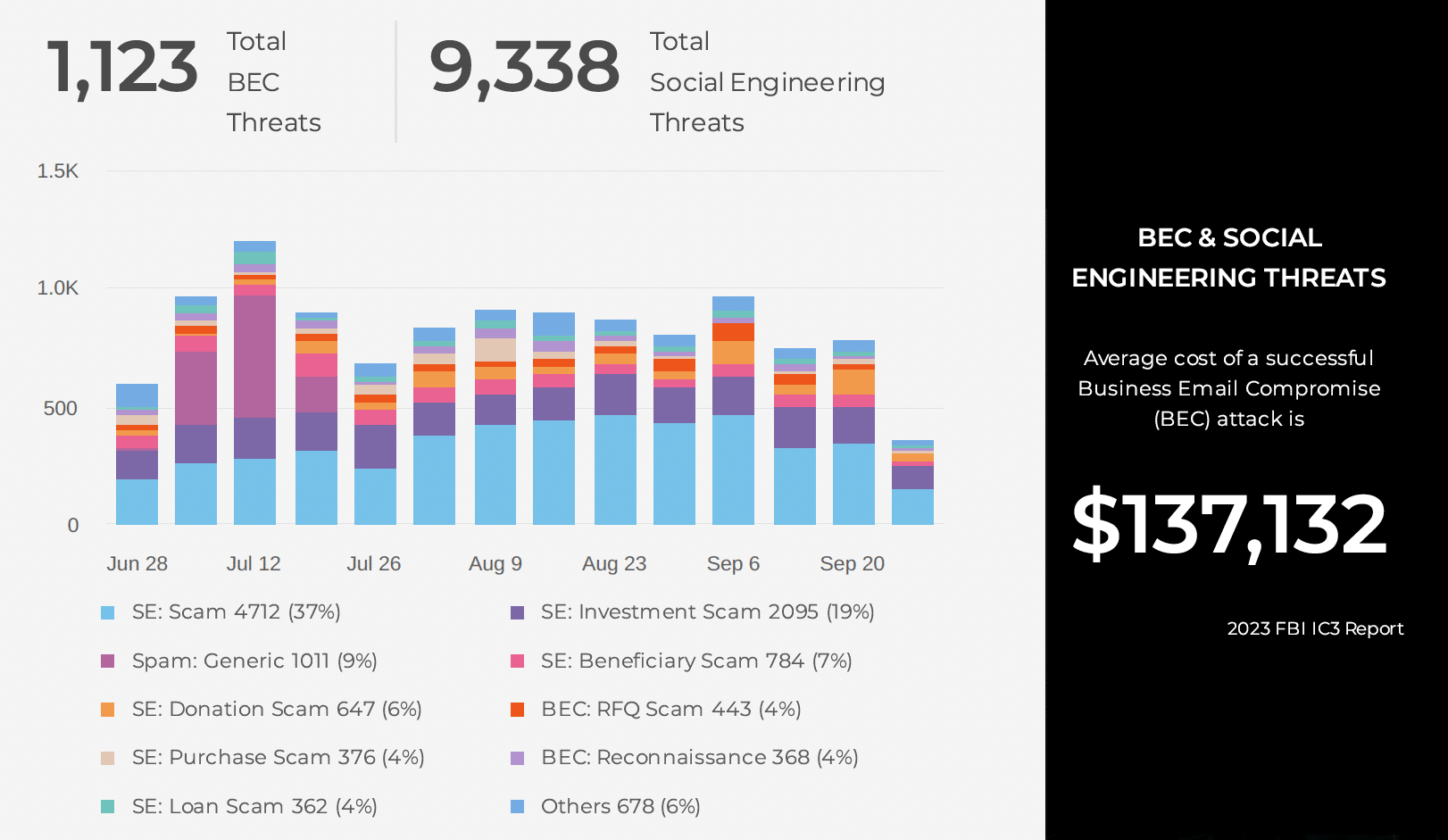

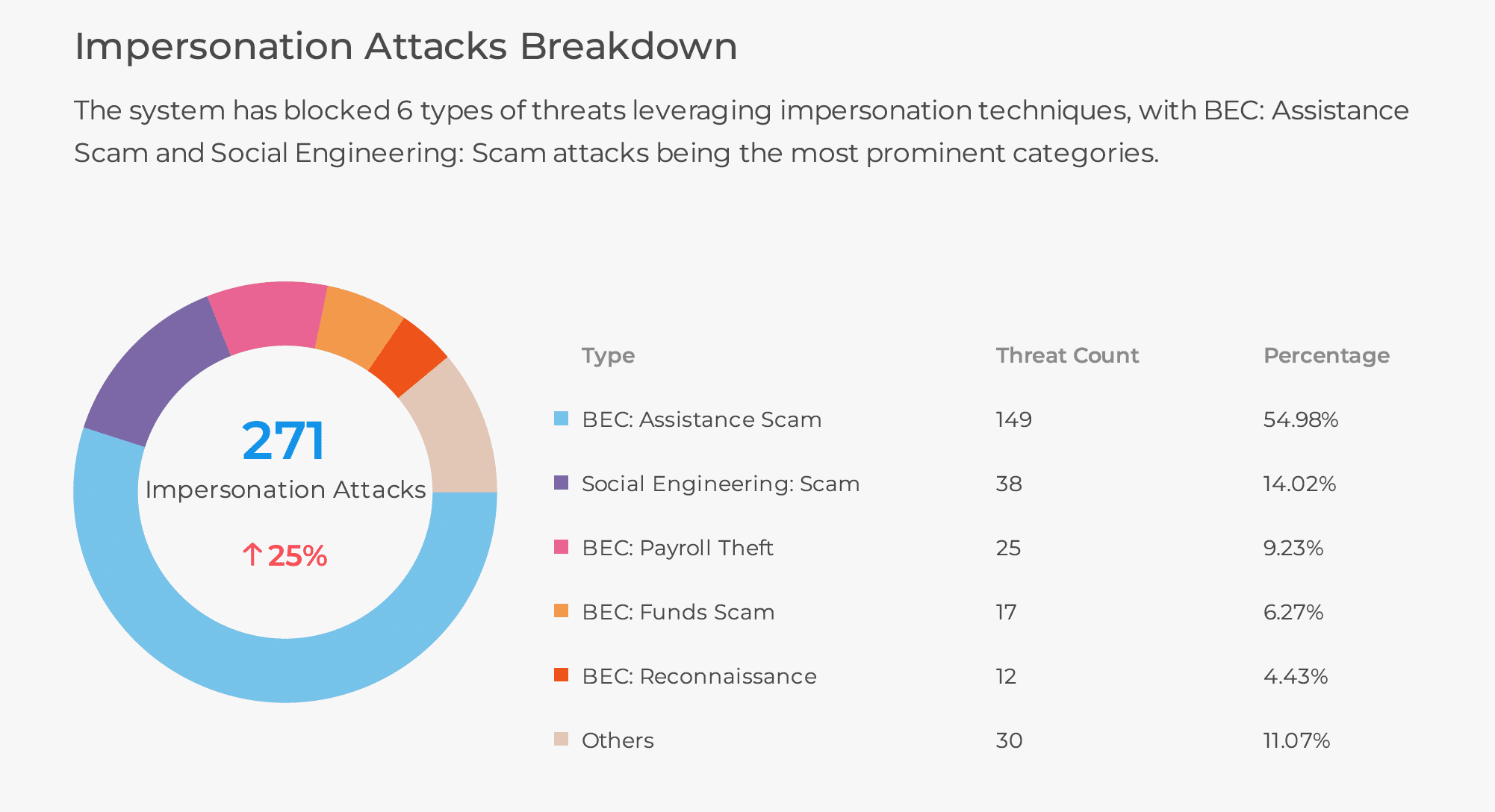

As mentioned, deepfakes can eventually lead to other types of social engineering, including the most popular – Business Email Compromise (BEC) and Business Text Compromise (BTC). Over the last few years, SlashNext has built generative AI into its product, using the same type of cloning technology used by deepfakes to stop tomorrow’s threats today.

HumanAI™ provides zero-hour protection using generative AI, relationship graphs, natural language processing (NLP), and computer vision to stop and remediate threats with a 99.9% detection rate and a false positive rate of one in one million. Here’s how our generative AI works.

A threat actor sends an original email such as this:

I have written you multiple times to remind you that you owed us $3325.32. Unfortunately, the invoice sent on August 1st is more than 14 days late. If you have lost or deleted the original invoice, here is a copy. Please send the payment immediately.

Then, the threat actor follows up with a BEC generative AI clone, such as this:

To make you aware, you owe us a total of $3325.32. In case you misplaced the original invoice sent on 08/01, I have included a copy here for your convenience. To the best of your ability, kindly pay the money as soon as you can.

And so on.

SlashNext BEC generative AI immediately kicks in and extracts the topic, intent, emotions and style of a malicious email, then generates the many ways a human could write a similar email (semantic clones) – providing a rich data set for ML training, testing and runtime predictions. In essence, SlashNext stops tomorrow’s threats today.
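
To make the idea of semantic clones concrete, here is a minimal Python sketch. It is not SlashNext's implementation, which this post does not describe; it assumes a much simpler, template-based approach in which each part of the message has a few interchangeable wordings and every combination becomes one labeled training example. The names `SLOTS`, `semantic_clones`, and the `"bec"` label are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical phrase slots: each slot lists interchangeable wordings
# taken from the two sample emails above.
SLOTS = {
    "opener": [
        "I have written you multiple times to remind you that",
        "To make you aware,",
    ],
    "claim": [
        "you owed us {amount}.",
        "you owe us a total of {amount}.",
    ],
    "invoice": [
        "If you have lost or deleted the original invoice, here is a copy.",
        "In case you misplaced the original invoice, I have included a copy here.",
    ],
    "ask": [
        "Please send the payment immediately.",
        "Kindly pay the money as soon as you can.",
    ],
}


def semantic_clones(slots, **fields):
    """Yield one email body per combination of slot wordings."""
    for combo in product(*slots.values()):
        yield " ".join(combo).format(**fields)


# Every clone carries the same intent, so each gets the same (assumed) label.
clones = list(semantic_clones(SLOTS, amount="$3325.32"))
training_set = [(text, "bec") for text in clones]
```

With two wordings in each of four slots, the sketch produces 2 × 2 × 2 × 2 = 16 variants of the same payment demand. A generative model would produce far more varied rewrites than fixed templates can, but the principle is the same: one observed malicious email is expanded into many examples that share its intent.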

To summarize, SlashNext offers real-time, complete, AI-powered integrated cloud security for email, mobile and web messaging apps for businesses. The technology uses relationship graphs and contextual analysis, BEC Generative AI Augmentation, Natural Language Processing, Computer Vision Recognition, File Attachment Inspection, and Sender Impersonation Analysis. These capabilities work together to thwart an entirely new generation of threat actors. SlashNext draws on an enormous database for zero-hour detections to identify more than 700,000 new threats per day with a 99.9% detection rate.

Schedule a Demo or Test Your Security Efficacy in Observability Mode in Minutes

To see a personalized demo and learn how our product stops BEC, click here to schedule a demo or compare SlashNext HumanAI™ with your current email security for 30 days using our 5-min setup Observability Mode.