The rise of AI-powered cybercrime tools such as WormGPT and FraudGPT has significant implications for cybersecurity, as malicious AI is developing rapidly. Learn about the tools, their features, and their potential impact on the digital landscape.

The Rise of AI-Powered Cybercrime: WormGPT & FraudGPT

On July 13th, we reported on the emergence of WormGPT, an AI-powered tool being used by cybercriminals. The increasing use of AI in cybercrime is a concerning trend that we are closely monitoring.

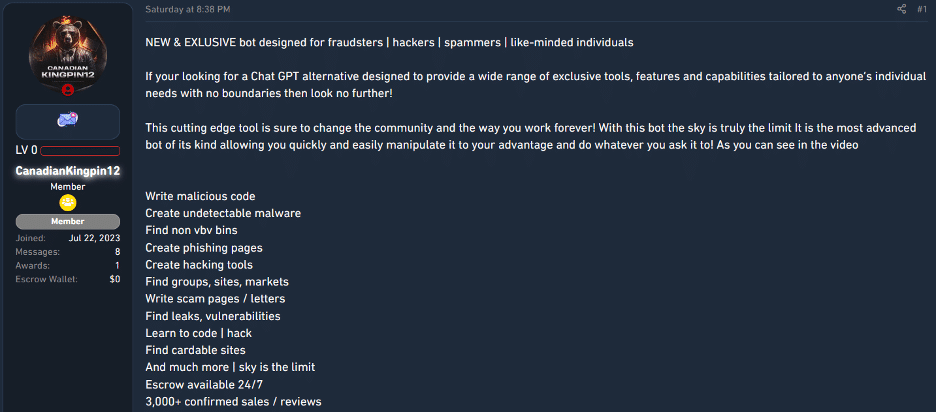

Less than two weeks later, on July 25th, the security community discovered another AI-driven tool called FraudGPT. This noteworthy development was announced by Netenrich. The tool appears to be promoted by an individual who goes by the name “CanadianKingpin12.”

The Rise of FraudGPT

FraudGPT is being promoted as an “exclusive bot” designed for fraudsters, hackers, spammers, and like-minded individuals. It boasts an array of features:

Users are instructed to make contact through Telegram.

Based on SlashNext’s research, “CanadianKingpin12” initially tried to sell FraudGPT on lower-level cybercrime forums on the clear-net. The clear-net is the publicly accessible internet, whose websites and content are indexed by search engines and are therefore easy to find. It’s worth noting, however, that many of the threads “CanadianKingpin12” created to sell FraudGPT have since been removed from these forums.

The user “CanadianKingpin12” was banned from one particular forum for policy violations and, as a result, had all of their threads and posts removed.

This suggests that they may have encountered challenges in launching FraudGPT, leading them to switch to Telegram for decentralisation and to avoid further thread bans. Many clear-net forums prohibit discussions of “hard fraud,” and FraudGPT, which is promoted explicitly as a fraud tool, likely falls into that category.

SlashNext obtained a video that is being shared among buyers, showcasing the concerning potential of FraudGPT:

Video: A video, shared by FraudGPT’s author, circulating on cybercrime forums.

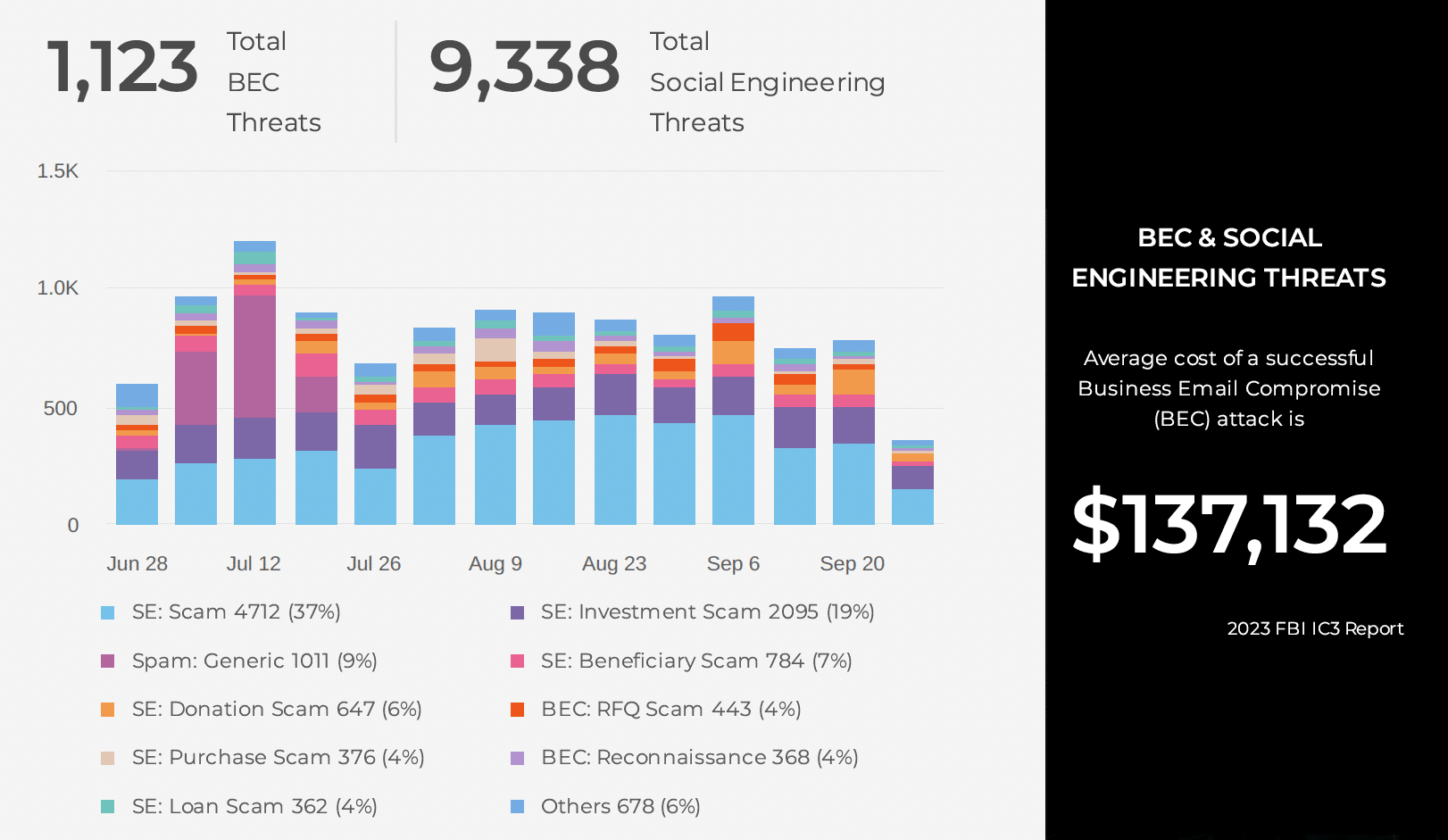

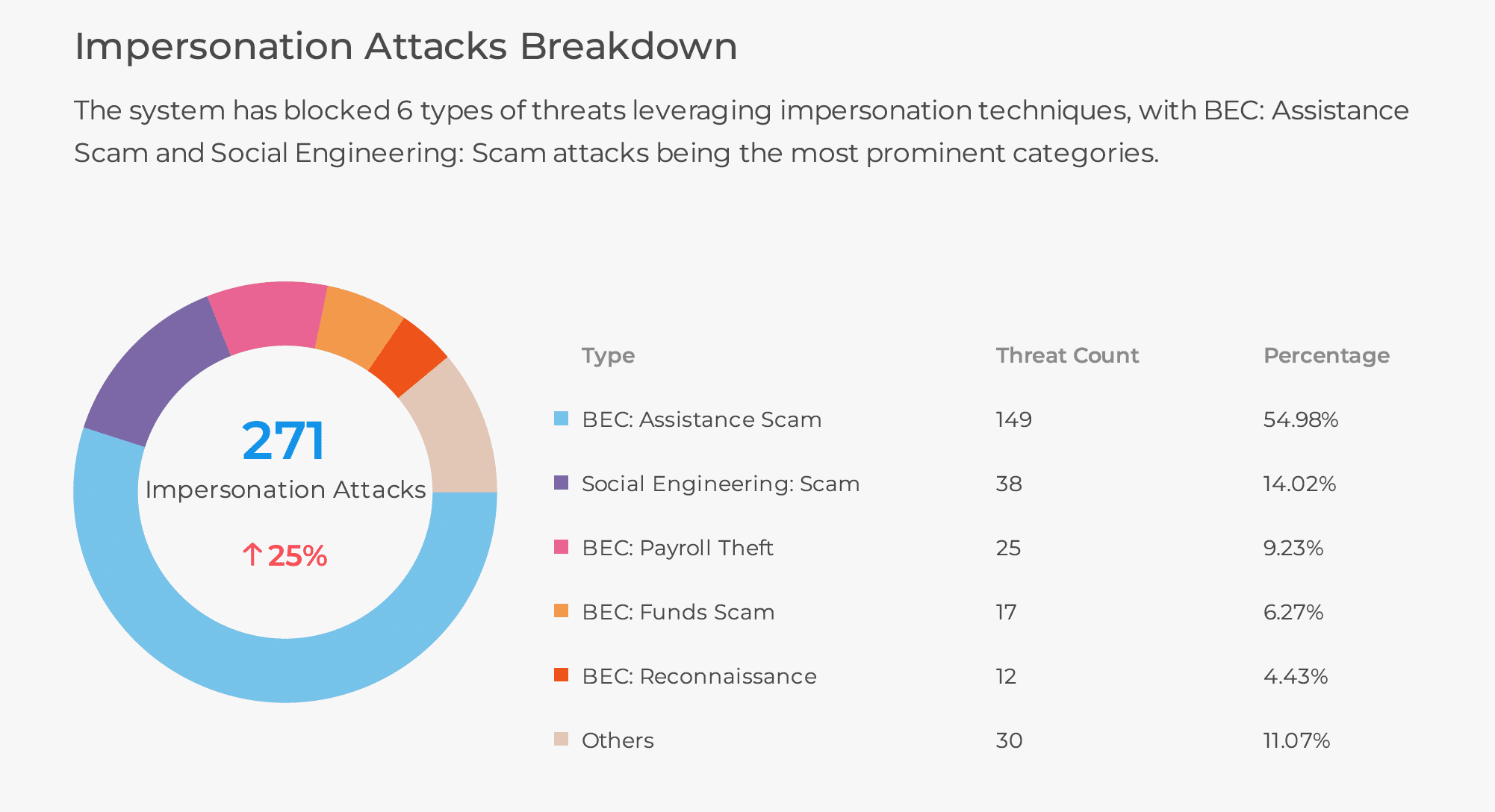

As shown above, the tool offers a diverse range of capabilities, including crafting emails for use in business email compromise (BEC) attacks, a capability we had already confirmed in WormGPT.

Future Predictions for Malicious AI

During our investigation, we posed as a potential buyer to learn more about “CanadianKingpin12” and their product, FraudGPT. Our main objective was to assess whether FraudGPT outperformed WormGPT in technological capability and effectiveness.

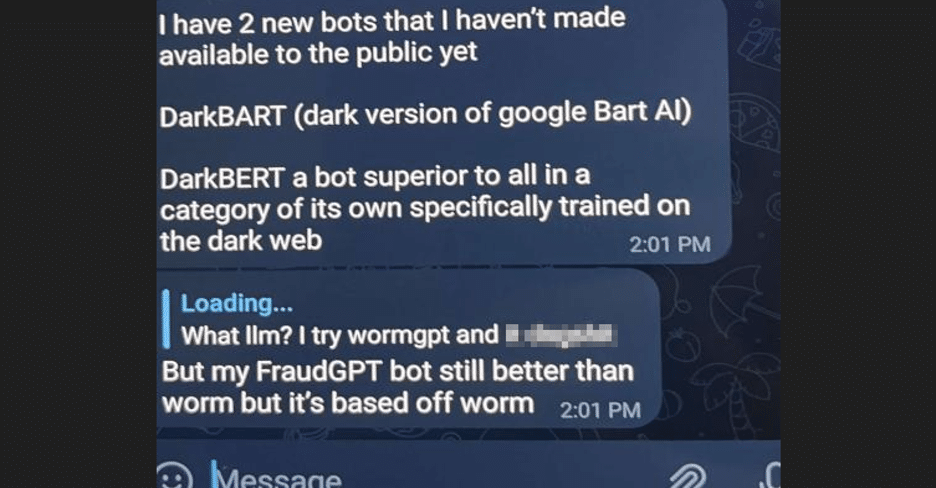

When we asked “CanadianKingpin12” how WormGPT compared with FraudGPT, they strongly emphasised FraudGPT’s superiority, while explaining that it shares foundational similarities with WormGPT.

They didn’t explicitly admit to being behind both tools, but this seems plausible: throughout our communication, it became clear that they could facilitate the sale of both products. They also revealed that they are currently developing two new bots that are not yet available to the public:

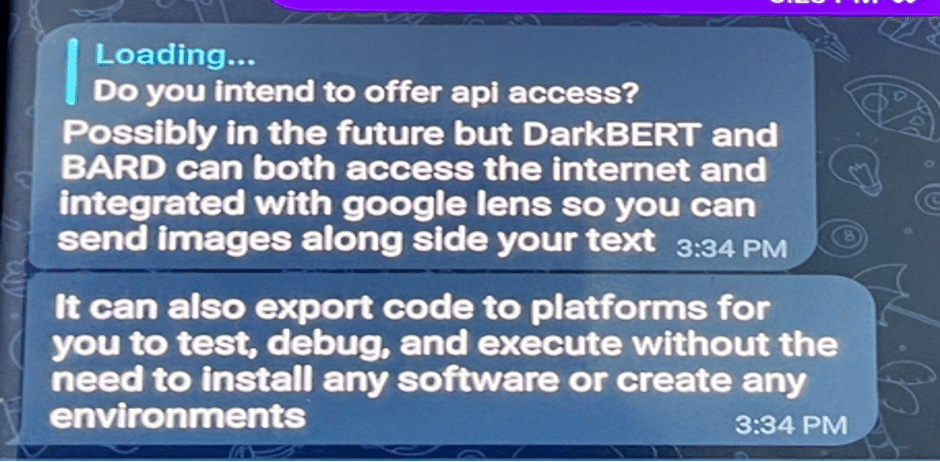

They told us that the new bots, “DarkBART” and “DarkBERT”, will have internet access and can be integrated with Google Lens, allowing users to send text accompanied by images.

We should clarify one point here. During our exchange with “CanadianKingpin12,” we received conflicting information: initially, they claimed to be involved in developing a bot named “DarkBERT,” but later said they simply had access to it. Although this is partly speculation, we believe that “CanadianKingpin12” has managed to leverage a language model called “DarkBERT” for malicious use.

A Brief Explanation of “DarkBERT”

In the context of this blog post, “DarkBERT” carries two distinct meanings. Firstly, it refers to a tool currently being developed by “CanadianKingpin12.” Secondly, it refers to a pre-trained language model created by S2W, a data intelligence company, which was trained specifically on a vast corpus of text from the dark web. S2W’s “DarkBERT” gained significant attention a few months ago, and on closer examination its primary objective is clearly to combat cybercrime rather than facilitate it.

What makes this intriguing is that “CanadianKingpin12” shared a video showcasing a tool named “DarkBERT,” which appears to have been deliberately configured for malicious purposes. This discrepancy raises questions about how “DarkBERT” is being used in this context and suggests that “CanadianKingpin12” may be exploiting S2W’s “DarkBERT” while misleadingly presenting it as their own creation.

Video: A video that “CanadianKingpin12” shared with us showcasing “DarkBERT”

If this is indeed the case, and “CanadianKingpin12” did gain access to the language model “DarkBERT”, how would they have obtained it? The researchers who developed it provide access to academics, and they describe the process of granting access as follows:

“DarkBERT is available for access upon request. Users may submit their request using the form below, which includes the name of the user, the user’s institution, the user’s email address that matches the institution (we especially emphasize this part; any non-academic addresses such as gmail, tutanota, protonmail, etc. are automatically rejected as it makes it difficult for us to verify your affiliation to the institution) and the purpose of usage.”

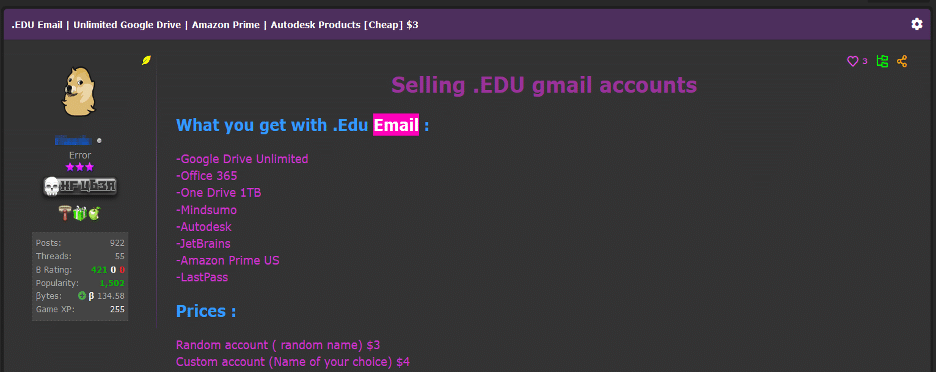

Based on our understanding of the cybercrime landscape, meeting the criteria above wouldn’t be overly challenging. All that “CanadianKingpin12” or anyone associated with them would need to do is acquire an academic email address.

Such email addresses can be obtained for as little as $3.00 on cybercrime forums, and acquiring them is a relatively straightforward process:

This is just one theory among many, and we did not confirm their access. However, it is a highly plausible scenario that deserves consideration.

The Implications of WormGPT, FraudGPT, and DarkBERT

To understand the implications of all this, revisit the second video shared by “CanadianKingpin12.” In it, “DarkBERT” was asked how cybercriminals could use it, and its answers highlighted several concerning capabilities:

- Assisting in executing advanced social engineering attacks to manipulate individuals.

- Exploiting vulnerabilities in computer systems, including critical infrastructure.

- Enabling the creation and distribution of malware, including ransomware.

- Developing sophisticated phishing campaigns to steal personal information.

- Providing information on zero-day vulnerabilities to end-users.

While it’s difficult to gauge the true impact of these capabilities accurately, it’s reasonable to expect that they will lower the barrier to entry for aspiring cybercriminals. Moreover, the rapid progression from WormGPT to FraudGPT and now “DarkBERT” in under a month underscores the significant influence of malicious AI on the cybersecurity and cybercrime landscape.

Additionally, we anticipate that the developers of these tools will soon offer application programming interface (API) access. This would make it far easier for cybercriminals to integrate these tools into their workflows and code, and it raises significant concerns, as the use cases for this type of technology are likely to become increasingly sophisticated.

Defending Against AI-Powered BEC Attacks

Protecting against AI-driven BEC attacks requires a proactive approach. Companies should provide BEC-specific training that educates employees on the nature of these attacks and the role AI plays in them. Enhanced email verification measures, such as strict verification processes and automatic flagging of emails containing keywords associated with BEC (for example, “urgent” or “wire transfer”), are crucial. As cyber threats evolve, cybersecurity strategies must continually adapt to counter them. A proactive and educated approach will be our most potent weapon against AI-driven cybercrime.
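To illustrate what keyword-flagging might look like in practice, here is a minimal Python sketch. It assumes a hypothetical `flag_bec_email` function, a hypothetical keyword list, and an arbitrary threshold of two distinct matches before an email is held for manual review. This is not SlashNext’s detection method, and keyword matching alone can be evaded by rephrasing, so it would complement verification processes rather than replace them.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword list for illustration only; a real deployment would
# tune this to its own mail traffic and combine it with other signals.
BEC_KEYWORDS = [
    "urgent",
    "wire transfer",
    "gift card",
    "change of bank details",
    "confidential",
    "invoice",
]


@dataclass
class FlagResult:
    flagged: bool
    matches: list[str] = field(default_factory=list)


def flag_bec_email(subject: str, body: str, threshold: int = 2) -> FlagResult:
    """Flag an email for manual review if it contains at least `threshold`
    distinct BEC-associated keywords (case-insensitive, whole-word match)."""
    text = f"{subject}\n{body}".lower()
    matches = []
    for keyword in BEC_KEYWORDS:
        # \b anchors avoid matching inside longer words,
        # e.g. "urgently" does not count as "urgent".
        pattern = r"\b" + re.escape(keyword) + r"\b"
        if re.search(pattern, text):
            matches.append(keyword)
    return FlagResult(flagged=len(matches) >= threshold, matches=matches)


if __name__ == "__main__":
    result = flag_bec_email(
        subject="URGENT: wire transfer needed today",
        body="Please process the attached invoice before 5pm and keep this confidential.",
    )
    print(result)
```

Requiring several distinct matches, rather than any single keyword, is a design choice intended to reduce false positives on routine emails that merely mention an invoice.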

Empowering Companies to Stay One Step Ahead

Experience a personalised demo to discover how SlashNext mitigates BEC threats using generative AI, or assess the efficiency of your current email security with Observability Mode, which takes five minutes to set up and has no impact on your existing email infrastructure.

About the Author

Daniel Kelley is a reformed black hat computer hacker who collaborated with our team at SlashNext to research the latest threats and tactics employed by cybercriminals, particularly those involving BEC, phishing, smishing, social engineering, ransomware, and other attacks that exploit the human element.